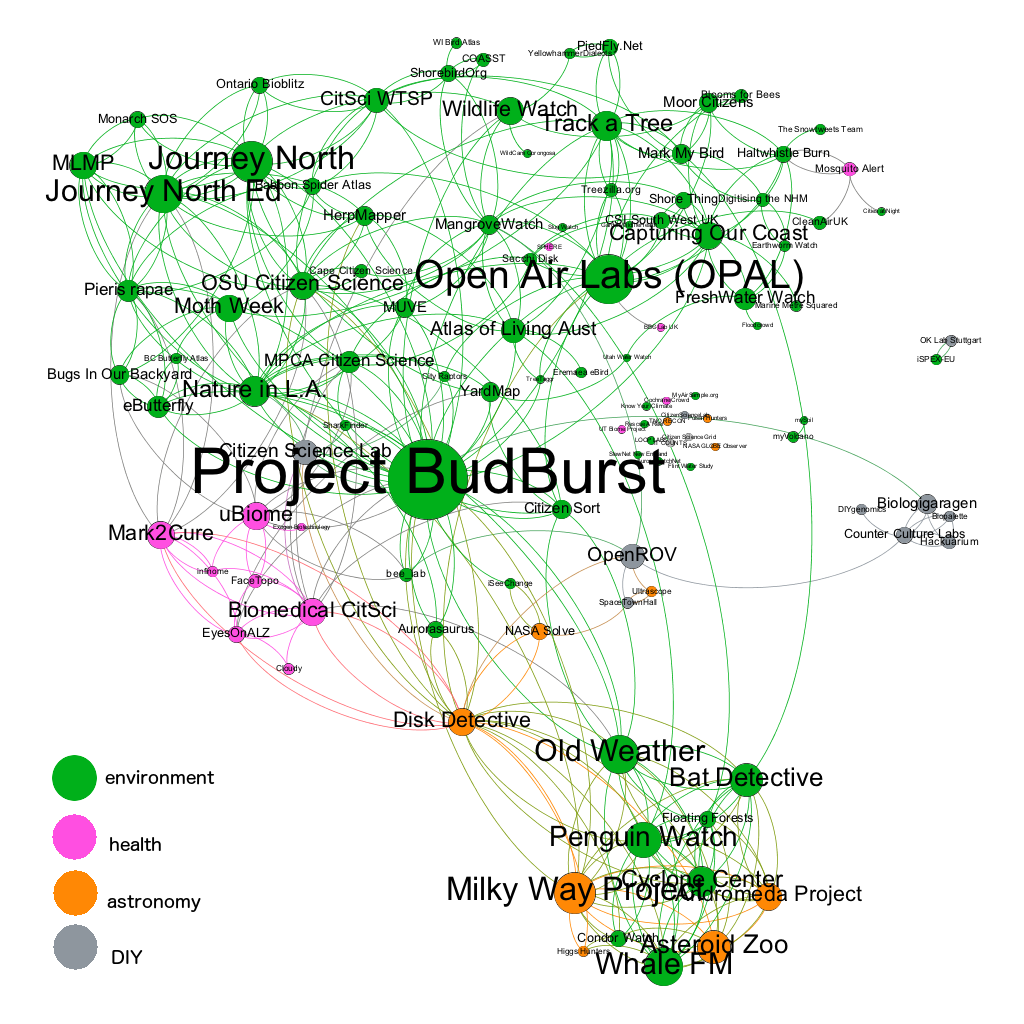

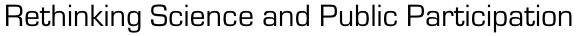

Figure 1: Follower/following links between Twitter accounts associated with the terms “citizen science(s)” or “citsci” (October 2016).

Nodes sized according to their number of “citizen science” followers (followers within the dataset).

Scientometric studies of citizen science

Earlier this year, two empirical studies of public participation in research, including “citizen science” and related approaches, were published, offering new insights into what the field is made of. Both rely on a methodology borrowed from scientometrics: they analyze scientific publications retrieved from the bibliographic database Web of Science using keywords related to the topic.

In a report for the French Ministry of Education and Research, Cointet & Joly show that publications related to public participation in research have been growing since the early 2000s and can be divided into three main strands: data collection for conservation/environment/astrophysics, community-based research on health or social issues, and participatory research for agriculture or local planning. In PLoS One, Kullenberg & Kasperowski also depict publications as growing since the 2000s, with three main strands, but of a different kind: data collection for conservation/ecology (a strand they share with Cointet & Joly, and the one in which the label “citizen science” originated), data collection for geography, and social science studies of public participation in research processes. Both studies therefore acknowledge the recent rise of the phenomenon, as well as the importance of environmental data collection. However, they differ on what the field of public participation in research is composed of.

Their differences stem largely from the keywords they used to retrieve the publications. While Cointet & Joly focused on variants of crowdsourcing, participatory research and citizen science (n=4,640 references [i]), Kullenberg & Kasperowski used a combination of numerous search terms, including public engagement AND science, terms explicitly related to participation in geography, and terms containing monitoring/sensing/auditing, street/civic/crowd/citizen science, epidemiology, etc. (n=1,935 references [ii]). Therefore, while Cointet & Joly leave out publications about public engagement in science (the “social sciences” strand of Kullenberg & Kasperowski), Kullenberg & Kasperowski leave out health- and agriculture-related research (the community-based and participatory research of Cointet & Joly).

These two studies are therefore attempts to widen the landscape of “citizen science” beyond its most common meaning, in order to embrace the diversity of participatory approaches in research. When Rick Bonney, a scientist at the Cornell Laboratory of Ornithology, coined the term “citizen science” in 1996, it was to designate environmental data collection launched by research institutions, the strand common to both studies. The new boundaries drawn by these two studies result from a complex combination of the authors’ prior knowledge of the subject, iterative queries and analyses of the literature, and their perception of the intended audience. It therefore comes as no surprise that Kullenberg & Kasperowski include social science studies in a paper published in PLoS One (a journal open to all scientific fields), or that Cointet & Joly include community-based research in a report written for a government ministry. Both studies can be seen as ways of making visible what remains invisible in the eyes of their audience, drawing on the supposed objectivity of scientometrics.

Rather than offering yet another picture of the landscape of public participation in research, it might be interesting to investigate who self-identifies as a “citizen science” actor in the stricter sense of the term. Who, today, embraces the “citizen science” label? Which fields are covered by this umbrella? Which institutions use the term, and which do not?

How did I proceed?

Although the two studies differ in what they consider participatory research, they agree on one main point: scientometric approaches are insufficient for studying it. As Kullenberg & Kasperowski note, 412 of the 490 “citizen science” projects they also analyzed (84%) did not produce published papers, because publishing scientific papers is simply not the aim of most participatory projects. Think of community-based research, which mainly intends to challenge expertise or make policy recommendations. Or DIYbio, another “citizen science”-related phenomenon that is absent from both studies. The two studies also agree that the field has been growing since the 2000s, in connection with the creation of web platforms for participatory projects. Twitter, the social media platform, is an interesting source of data for studying who the “citizen science” actors are today. Because it is a public communication tool, most of its users tweet publicly and have additional identities elsewhere on the web. Twitter can therefore be considered a good source for identifying “citizen science” advocates, defined here as users who mention “citizen science” in their biographical sketches (bios).

I retrieved the account data and followers of the 445 accounts that used the terms “citsci”, “citizen science” or “citizenscience” (capitalized or not, singular or plural) in their bios (October 2016). I then manually coded each account according to four variables: type (individual/organization), gender (male/female) where relevant, subtype (e.g. “scientist”, “citizen science project”), and field of interest or inquiry (e.g. “conservation”, “DIY”). I also built the network of follower/following links between these accounts. (For those of you who are techies: I used the great R package rtweet by Michael W. Kearney for data retrieval, the R package rgexf for network building, OpenOffice Calc for coding, and Gephi for network visualization. The R script is available on GitHub.)
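The data collection itself was done in R, and that script is not reproduced here. As a rough illustration of the same logic, the Python sketch below assumes that bios and follower lists have already been downloaded into plain dictionaries; the names `bios`, `followers` and all helper functions are hypothetical. It shows three steps: a case-insensitive keyword filter on bios (an assumed regular expression for “citsci”, “citizen science”, “citizenscience” and the plural form), an edge list restricted to follower links between selected accounts, and the number of in-dataset followers per account, which is the node size used in the figures.

```python
import re

# Assumed keyword rule (not the original R code): case-insensitive match for
# "citsci", "citizen science", "citizenscience" and plural "citizen sciences".
CITSCI_PATTERN = re.compile(r"citsci|citizen ?sciences?", re.IGNORECASE)


def is_citsci_account(bio: str) -> bool:
    """Return True if a bio mentions one of the target terms."""
    return CITSCI_PATTERN.search(bio) is not None


def select_accounts(bios: dict[str, str]) -> set[str]:
    """Keep the handles whose bio matches the keyword rule."""
    return {handle for handle, bio in bios.items() if is_citsci_account(bio)}


def internal_edges(
    followers: dict[str, list[str]], accounts: set[str]
) -> list[tuple[str, str]]:
    """Return (follower, followed) pairs where both accounts are in the dataset."""
    edges = []
    for followed, follower_list in followers.items():
        if followed not in accounts:
            continue
        for follower in follower_list:
            if follower in accounts and follower != followed:
                edges.append((follower, followed))
    return edges


def citsci_follower_counts(
    edges: list[tuple[str, str]], accounts: set[str]
) -> dict[str, int]:
    """Count, for each account, its followers that are themselves in the dataset."""
    counts = {handle: 0 for handle in accounts}
    for _follower, followed in edges:
        counts[followed] += 1
    return counts


# Toy data (hypothetical handles, not taken from the real dataset).
bios = {
    "alice": "Ecologist, #CitSci enthusiast",
    "bob": "Citizen Sciences coordinator",
    "carol": "Astronomy and citizenscience",
    "dave": "Runner and coffee lover",
}
followers = {
    "alice": ["bob", "carol", "dave"],
    "bob": ["alice"],
    "carol": ["alice", "bob"],
}

accounts = select_accounts(bios)
edges = internal_edges(followers, accounts)
sizes = citsci_follower_counts(edges, accounts)
print(sorted(sizes.items()))  # [('alice', 2), ('bob', 1), ('carol', 2)]
```

Restricting edges to pairs inside the dataset is what turns raw follower counts into “citizen science” follower counts. A real pipeline would also have to deal with API rate limits and with protected or deleted accounts, which this sketch ignores.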

What can we learn about “citizen science” from this analysis?

Result #1: almost no science amateurs identify with “citizen science”.

My dataset is roughly evenly divided between organizations (43%) and individuals (57%). People who declare an interest in “citizen science” either work in science (62%) or belong to the outreach sphere (25%): science journalists, writers, educators, etc. Only 3% of individuals have no connection to science of any kind (information is missing for another 7%). Researchers, be they PhD students, postdocs or faculty, are the most numerous group in the dataset. Three-quarters of them belong to fields that organize “citizen science” projects, while the remaining quarter study “citizen science” projects: they work in user experience, design or gaming, or in science and technology studies or education. The discourse about “citizen science” on Twitter is essentially carried by science-related professionals. The label “citizen science(s)” has not been adopted by science amateurs on Twitter.

Result #2: “citizen science” is mostly about data collection for environmental monitoring.

“Citizen science” projects account for more than half of the organizational accounts. Two-thirds of those projects relate to environment or conservation, 20% to health or DIYbio and 10% to astronomy (Figure 2). A broadly similar distribution is found among the scientists who launch citizen science projects: two-thirds are conservation or environmental scientists, 20% work in astronomy or geography and 10% in health or DIYbio. Non-environmental projects therefore make up only a minority of those using the “citizen science” label.

Figure 2: Follower/following links between “citizen science” projects on Twitter (October 2016). One can distinguish a large component on biodiversity/conservation/environment questions (top), a small, isolated subset around DIY (mostly DIYbio, right), a subset of health projects (left), and a subset on the Zooniverse platform (bottom).

Nodes sized according to their number of “citizen science” followers (followers within the dataset).

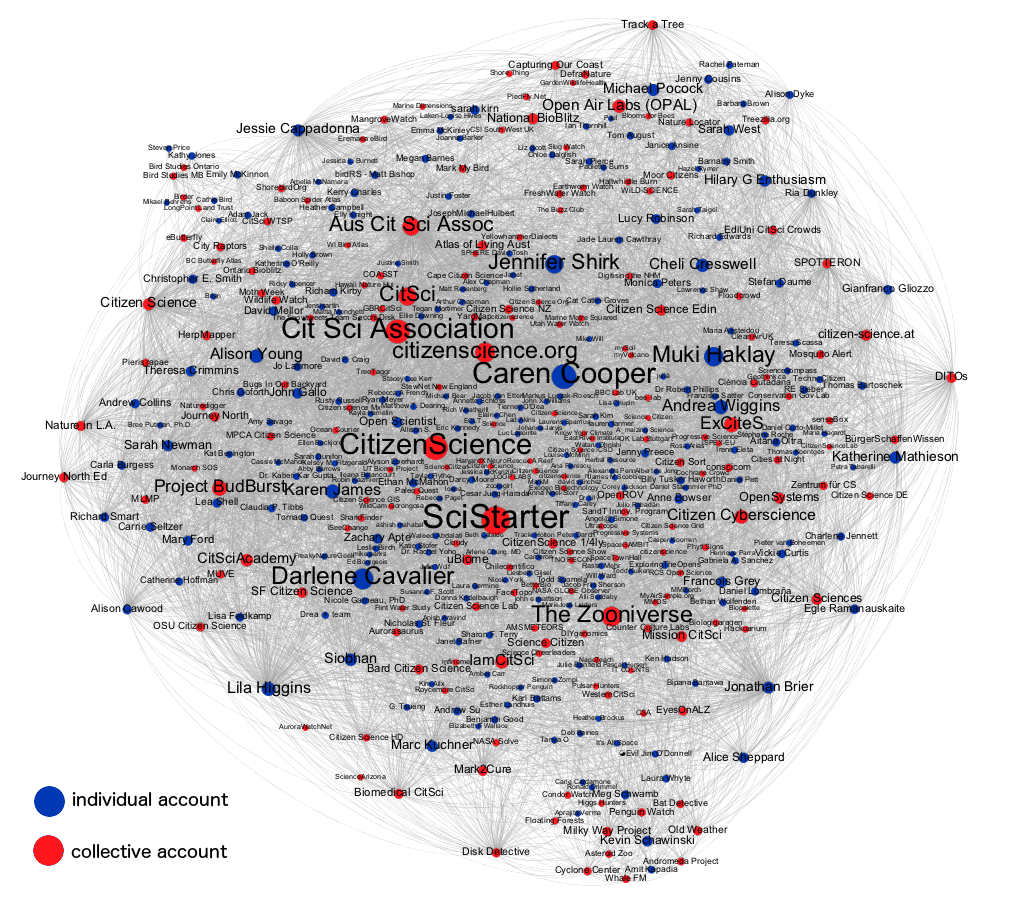

Result #3: advocates of “citizen science” are mainly women.

The 2016 NSF figures on the science and engineering (S&E) workforce show that women made up only 29% of workers in S&E occupations in 2013, with variations across occupational fields (exceeding 50% only in the social sciences). In my dataset, however, more than 60% of individual accounts belong to women, both in outreach professions (70%) and among scientists (58%). These accounts are also highly followed. Of the five individual accounts among the ten most-followed accounts, four are run by women: Caren Cooper (#2, Cornell Lab), CitizenScience by Chandra Clarke (#3), Darlene Cavalier (#5, founder of SciStarter) and Jennifer Shirk (#9, Director of Field Development for the US “citizen science” association). The fifth is Muki Haklay (#8, Director of the Extreme Citizen Science group). Caren Cooper and Darlene Cavalier, for instance, have more “citizen science” followers than The_Zooniverse, the account of one of the biggest “citizen science” platforms, and far more followers overall than the U.S. Citizen Science Association. Women therefore play a major role in advocating “citizen science”.

Result #4: “citizen science” is becoming institutionalized.

Another finding of my study, perhaps the most obvious one, is that all the retrieved accounts are connected to one another, directly or indirectly (see the introductory image, Figure 1). Removing the organizational accounts, or the most-followed accounts, does not change this feature of the network. In other words, accounts related to “citizen science” form a single community of people following each other on Twitter.
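A connectivity claim of this kind can be checked with a breadth-first search on the follower graph, ignoring edge direction (weak connectivity). The Python sketch below is a hypothetical illustration, not the author’s procedure: it takes follower links as (follower, followed) pairs, tests whether the graph is weakly connected, and re-tests after removing the k accounts with the most in-dataset followers. The function names and the toy network are invented for illustration; the toy network deliberately depends on a single hub, which is the opposite of what was observed in the “citizen science” data.

```python
from collections import deque


def is_weakly_connected(nodes: set[str], edges: list[tuple[str, str]]) -> bool:
    """True if all nodes form one component when edge direction is ignored."""
    if not nodes:
        return True
    # Undirected adjacency, restricted to the given node set.
    neighbours: dict[str, set[str]] = {n: set() for n in nodes}
    for a, b in edges:
        if a in nodes and b in nodes:
            neighbours[a].add(b)
            neighbours[b].add(a)
    # Breadth-first search from an arbitrary start node.
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in neighbours[current]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) == len(nodes)


def connected_without_top(
    nodes: set[str], edges: list[tuple[str, str]], k: int
) -> bool:
    """Drop the k accounts with the most in-dataset followers, then re-test."""
    in_degree = {n: 0 for n in nodes}
    for follower, followed in edges:
        if follower in nodes and followed in nodes:
            in_degree[followed] += 1
    # Sort by descending follower count, breaking ties by handle for determinism.
    ranked = sorted(nodes, key=lambda n: (-in_degree[n], n))
    return is_weakly_connected(nodes - set(ranked[:k]), edges)


# Toy network held together by a single hub (hypothetical handles).
nodes = {"hub", "a", "b", "c"}
edges = [("a", "hub"), ("b", "hub"), ("c", "hub"), ("a", "b")]
print(is_weakly_connected(nodes, edges))       # True
print(connected_without_top(nodes, edges, 1))  # False: "c" becomes isolated
```

Testing the removal of organizational accounts instead of top accounts would only mean passing a different node set to `is_weakly_connected`.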

The most followed institutional accounts are community-based platforms and national organizations [iii]. The earliest are community-based platforms: SciStarter (#1, 2009), which is followed by more than half of the “citizen science” accounts, The Zooniverse (#7, 2009) and CitSci.org (#10, 2011). The national organizations promoting “citizen science” were created later: the US Citizen Science Association (#4 and #6, 2012) and the Australian Citizen Science Association (#11, 2014).

This points to a growing institutionalization of “citizen science”, mainly through newly created organizations dedicated to its promotion. A new profession is emerging, that of “citizen science” coordinator (one quarter of the “outreach” profiles). The professional network LinkedIn now displays more than 6,000 profiles with this label. A few companies have also started selling kits or sensors for environmental monitoring.

So what? Widening the scope of citizen sciences – a strategic endeavour

This study has one main limitation, which stems from the decision to work with Twitter. It is noteworthy, for instance, that Rick Bonney, who coined the term “citizen science”, is absent from my dataset, as is ECSA (the European Citizen Science Association, which opened a Twitter account only in early January 2017, after the data were collected). Not everyone uses Twitter. Those who consider citizen science a major part of their professional or personal life but do not have a Twitter account are therefore invisible in this dataset.

Nevertheless, we can now better understand why Kullenberg & Kasperowski, or Cointet & Joly, chose to widen the scope of “citizen science”. The field’s visibility is growing rapidly through newly created institutions, around a small set of actors who mostly work in scientific institutions and are concerned with environmental monitoring. But public participation in research also comprises a diversity of approaches, such as action research or patients’ activism, that social scientists have studied for decades. It also connects to (deliberative) public engagement in science, another phenomenon that social scientists have studied and sometimes promoted. From a social science point of view, the “citizen science” label may mask this diversity of approaches. Hence the attempts to widen its scope, in order to make this diversity visible to the scientists who develop these approaches and to the policy makers who fund them.

Download the list of accounts with their additional categories (CSV file)

[i] TS=((("science" OR "scientific" OR "research") AND ("crowdsourcing")) OR "crowd science" OR "participative research" OR "participatory research" OR "citizen science")

[ii] (TS="citizen science" NOT UT=(…)) OR (TS=("Community-based monitor*" OR "Community-based envir*") NOT TS=("community-based environmental interventions" OR "community-based environmental change intervention" OR "community-based environment." OR "community-based environments" OR "community-based environmental protest" OR "community based environmental movements" OR "community-based environmental health" OR "community-based environmental education" OR "1-y symptom" OR "43.1-123.0") NOT UT=(…)) OR (TS="civic science" NOT UT=(…)) OR (TS="crowd science") OR (TS="civic technoscience") OR (TS="community based auditing") OR (TS="community environmental policing") OR (TS="citizen observatories") OR (TS="participatory science") OR (TS="volunteer monitoring" NOT UT=(…)) OR (TS="volunteered geographic information" OR TS="volun* GIS" NOT UT=(…)) OR (TS="neogeography" NOT UT=(…)) OR (TS="participatory GIS" NOT UT=(…)) OR (TS="street science") OR (TS="popular epidemiology" NOT UT=(…)) OR (TS="public engagement" AND TS="science" NOT UT=(…)) OR (TS="participatory monitoring" AND TS="science") OR (TS="participatory sensing" AND TS="science" NOT UT=(…)) OR (TS="public participation in scientific research") OR (TS="locally based monitoring") OR (TS="volunteer based monitoring")
